Guitar tablature transcription (GTT) aims to automatically generate symbolic representations from real solo guitar performances. Due to its applications in education and musicology, GTT has gained traction in recent years. However, GTT robustness has been limited by the small size of available datasets. Researchers have recently turned to synthetic data, which simulates guitar performances using pre-recorded or computer-generated tones and can be generated automatically at large scale. The present study complements these efforts by demonstrating that GTT robustness can be improved by including synthetic training data created from recordings of real guitar tones played with different audio effects. We evaluate our approach on a new evaluation dataset of professional solo guitar performances that we composed and collected, featuring a wide array of tones, chords, and scales.

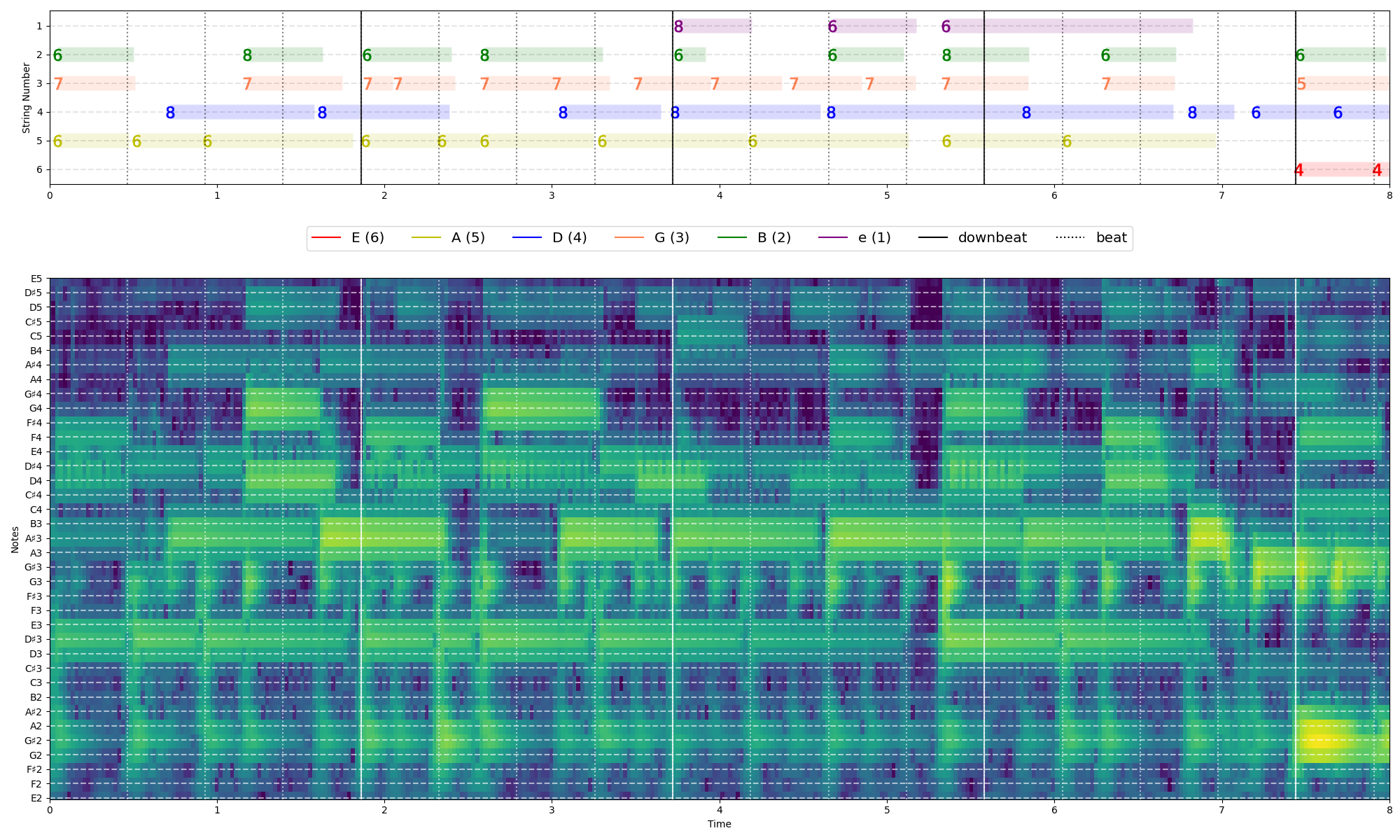

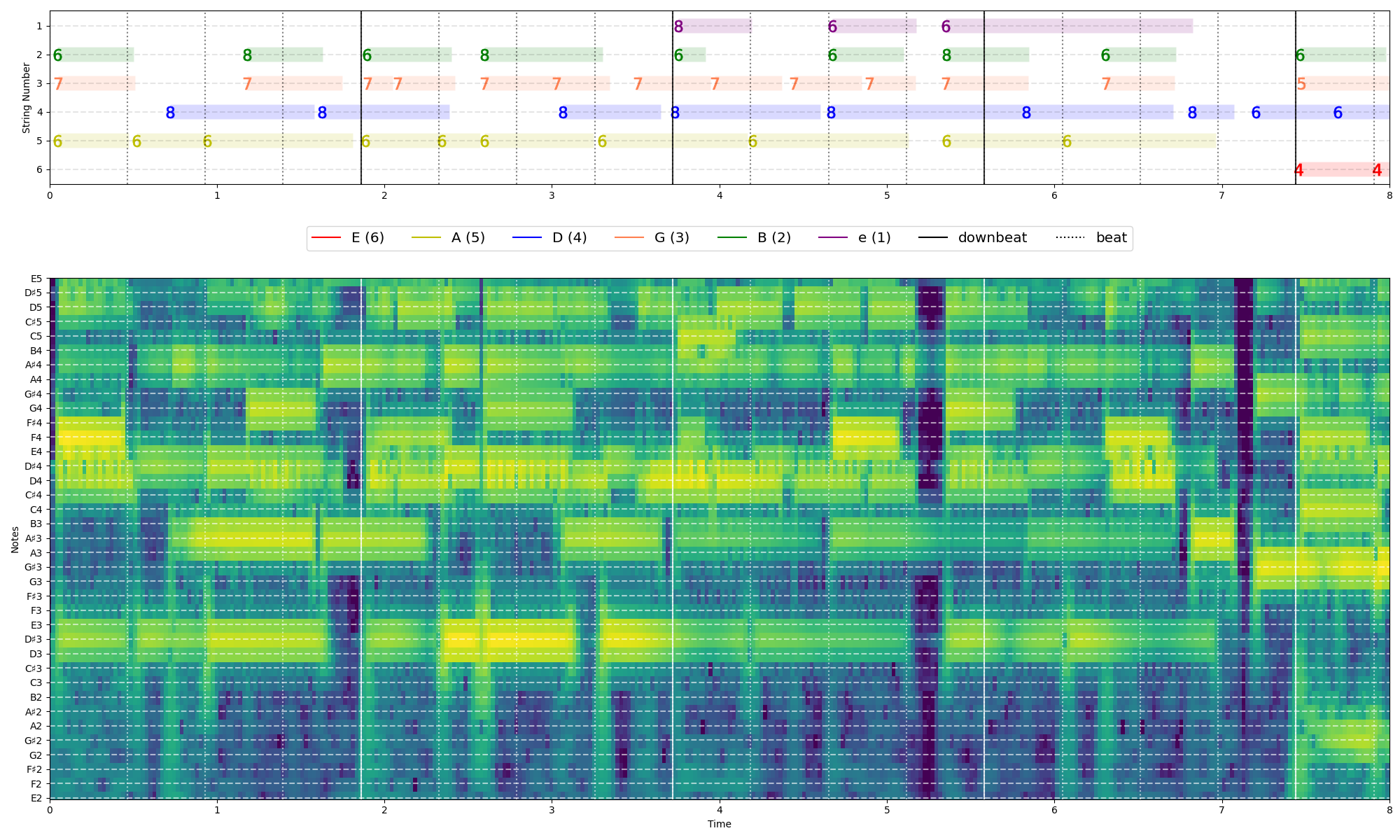

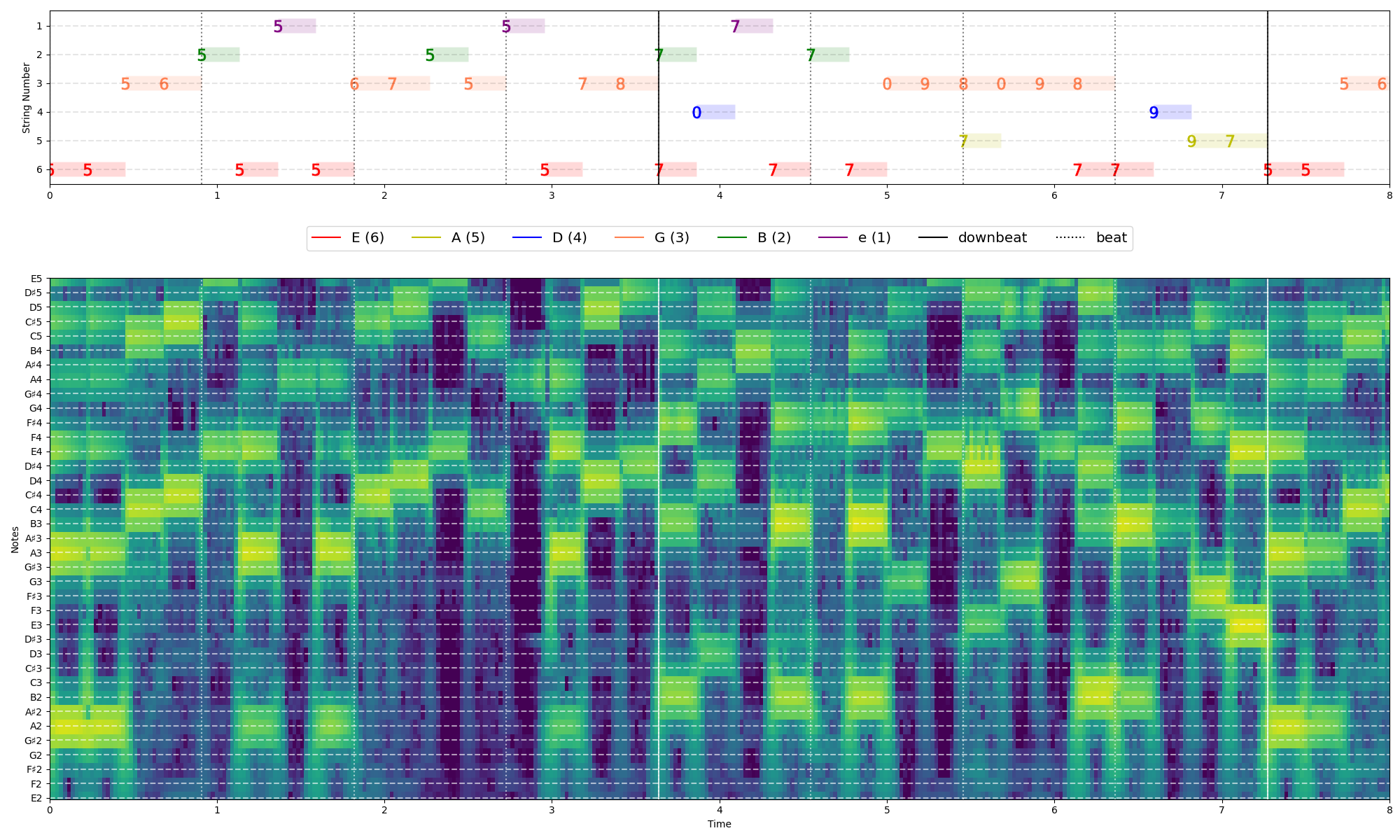

Our synthetic solo guitar performances, both melodies and chords, consist of notes played with varied guitar tones and audio effects in order to maximize tone diversity. We achieve this by randomly selecting tones from a large bank of examples when generating an audio track. This strategy follows from our hypothesis that such diversity makes the model more robust: it lets the model concentrate on pitch content and on string and fret inference while disregarding specific timbral qualities. Below, compare a GuitarSet track with GuitarSetFX and GuitarProFX tracks, and see the CQT spectrograms of their first few seconds.

GuitarSet Track

GuitarSetFX Track

GuitarProFX Track
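
The random tone selection described above can be illustrated with a minimal sketch. It is not the code used to build GuitarSetFX or GuitarProFX: the tone names, the `assign_tones` helper, and the choice to draw a tone per note (rather than per track or per phrase) are all assumptions made for illustration. The only fixed facts it relies on are the standard-tuning open-string MIDI pitches.

```python
import random

# Hypothetical tone bank: each entry stands for one recorded guitar tone
# plus an effect chain. The names are placeholders, not the real bank.
TONE_BANK = ["clean", "clean_chorus", "overdrive", "distortion_delay", "phaser"]

# Standard-tuning open-string MIDI pitches, string 6 (low E) to string 1 (high E).
OPEN_STRING_MIDI = {6: 40, 5: 45, 4: 50, 3: 55, 2: 59, 1: 64}


def midi_pitch(string, fret):
    """Pitch depends only on string and fret, never on the tone."""
    return OPEN_STRING_MIDI[string] + fret


def assign_tones(notes, tone_bank, seed=None):
    """Pair every (string, fret) note with a tone drawn uniformly at random.

    Assumption: tones are sampled independently per note.
    """
    rng = random.Random(seed)
    return [
        {"string": s, "fret": f, "pitch": midi_pitch(s, f), "tone": rng.choice(tone_bank)}
        for s, f in notes
    ]


if __name__ == "__main__":
    riff = [(6, 0), (5, 2), (4, 2), (1, 12)]
    for note in assign_tones(riff, TONE_BANK, seed=0):
        print(note)
```

Because the string, fret, and pitch labels are unchanged while the tone varies, a model trained on such data sees the same target under many timbres, which is the intuition behind the diversity hypothesis.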

EGSet12 is a new evaluation set of twelve original solo electric guitar performances (average duration 31.65 seconds, 379.8 seconds in total). The pieces were composed for this project by a professional musician and guitar player, and showcase the full tonal range of the electric guitar across diverse melodies and chord complexities. EGSet12 spans a broad spectrum of styles, including pop, funk, jazz, and twelve-tone, reflecting varied tonalities, keys, rhythms, and modes. Hear some samples below!

Track 02

Track 06

Track 09

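The following results show that our proposed method makes the model more robust on both multi-pitch and tablature estimation metrics: adding synthetic training data raises multi-pitch F1 from 0.638 to as much as 0.740, and tablature F1 from 0.447 to as much as 0.585.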

| Model | Multi-pitch F1 | Multi-pitch P | Multi-pitch R | Tablature F1 | Tablature P | Tablature R | TDR |
|---|---|---|---|---|---|---|---|
| TabCNN[1] | 0.638±0.060 | 0.819±0.080 | 0.530±0.067 | 0.447±0.071 | 0.565±0.089 | 0.375±0.067 | 0.695±0.075 |
| + GuitarSetFX | 0.740±0.055 | 0.835±0.085 | 0.679±0.052 | 0.557±0.088 | 0.619±0.100 | 0.518±0.084 | 0.755±0.106 |
| + GuitarProFX | 0.719±0.061 | 0.839±0.082 | 0.647±0.068 | 0.585±0.084 | 0.658±0.073 | 0.541±0.087 | 0.819±0.075 |

Table 1: TabCNN performance on EGSet12. Each cell is the metric averaged across the twelve tracks (± denotes standard deviation). Top row: performance as trained by Wiggins & Kim. Bottom rows: performance when the training data also includes simulated tracks.
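
As a rough illustration of how the precision (P), recall (R), and F1 entries and their "mean ± standard deviation" summaries can be computed, the sketch below scores one track from sets of active (frame, pitch) cells and then aggregates per-track scores. It is a sketch under stated assumptions, not the evaluation code behind Table 1: the frame-level set representation, the helper names, and the use of the population standard deviation are all assumptions, and TDR is not covered.

```python
from statistics import mean, pstdev


def precision_recall_f1(predicted, reference):
    """Score one track.

    predicted, reference: sets of (frame, pitch) pairs marking active cells.
    For tablature metrics the pairs could instead be (frame, string, fret).
    """
    true_positives = len(predicted & reference)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def summarize(per_track_scores):
    """Return (mean, standard deviation) across tracks.

    Assumption: population standard deviation; the source does not say
    whether the population or sample estimate was used.
    """
    return mean(per_track_scores), pstdev(per_track_scores)


if __name__ == "__main__":
    # Two toy tracks with made-up predictions, purely for illustration.
    track_a = precision_recall_f1({(0, 60), (0, 64), (1, 60)}, {(0, 60), (1, 60), (1, 67)})
    track_b = precision_recall_f1({(0, 52)}, {(0, 52), (1, 55)})
    avg, std = summarize([track_a[2], track_b[2]])
    print(f"F1 = {avg:.3f}±{std:.3f}")
```

Averaging per-track scores, as the caption describes, weights every track equally regardless of its length, which differs from pooling all frames before computing a single score.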

Figure 1: Each column is a two-second EGSet12 excerpt, comparing predictions made by models trained using GuitarSet, with and without GuitarProFX, against ground truth. Each circle is a tracked note on the guitar fretboard over time and vertical lines indicate musical beats.